A world filled with stimuli.

We carry the world’s information in our pockets, yet we struggle to navigate the constant stream of content. We are at a pivotal moment in human history: knowledge is abundant but lacks curation. How can computers enhance our capabilities while nurturing our digital wellness? It is time to move beyond the information era.

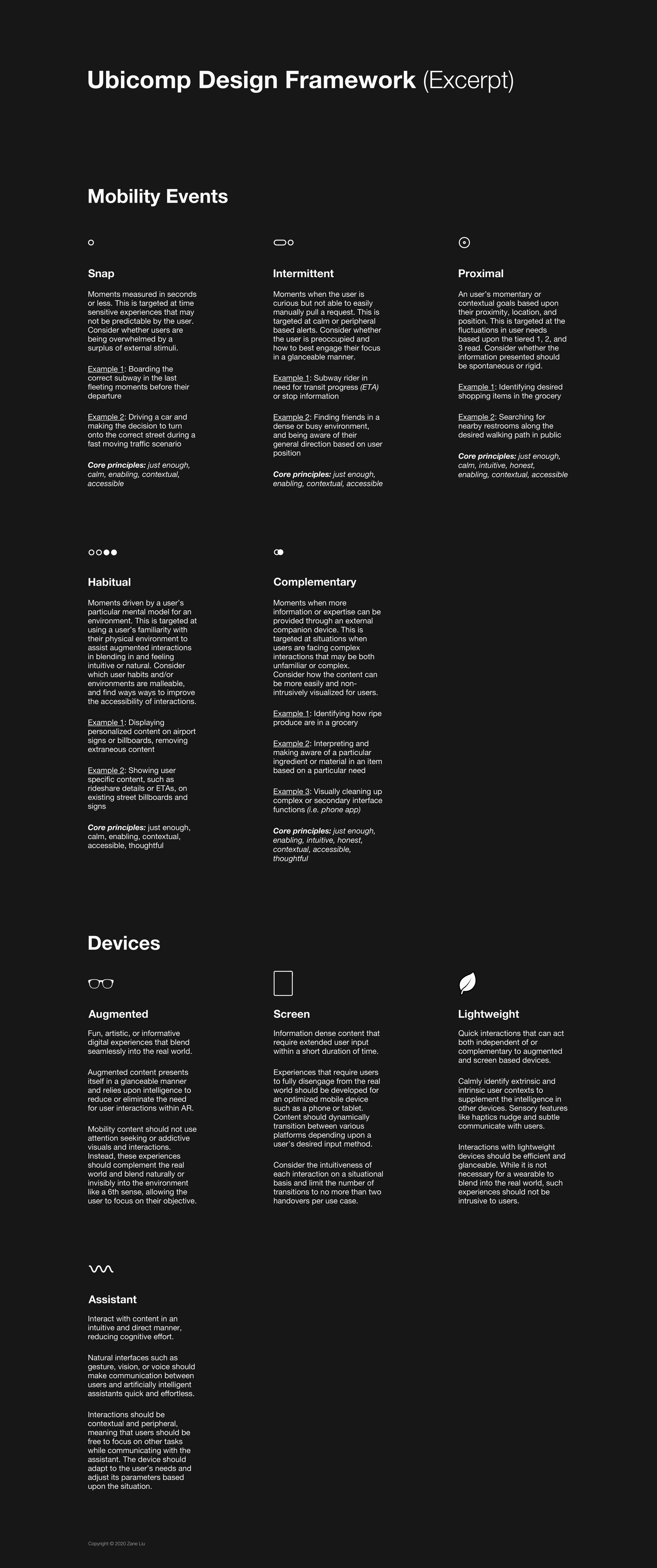

Goldilocks aims to harness the power of ubiquitous computing and artificial intelligence, ushering in a new era of wearable computers. This evolution reshapes our interactions with the world at every moment, making technology imperceptible and our lives effortless.

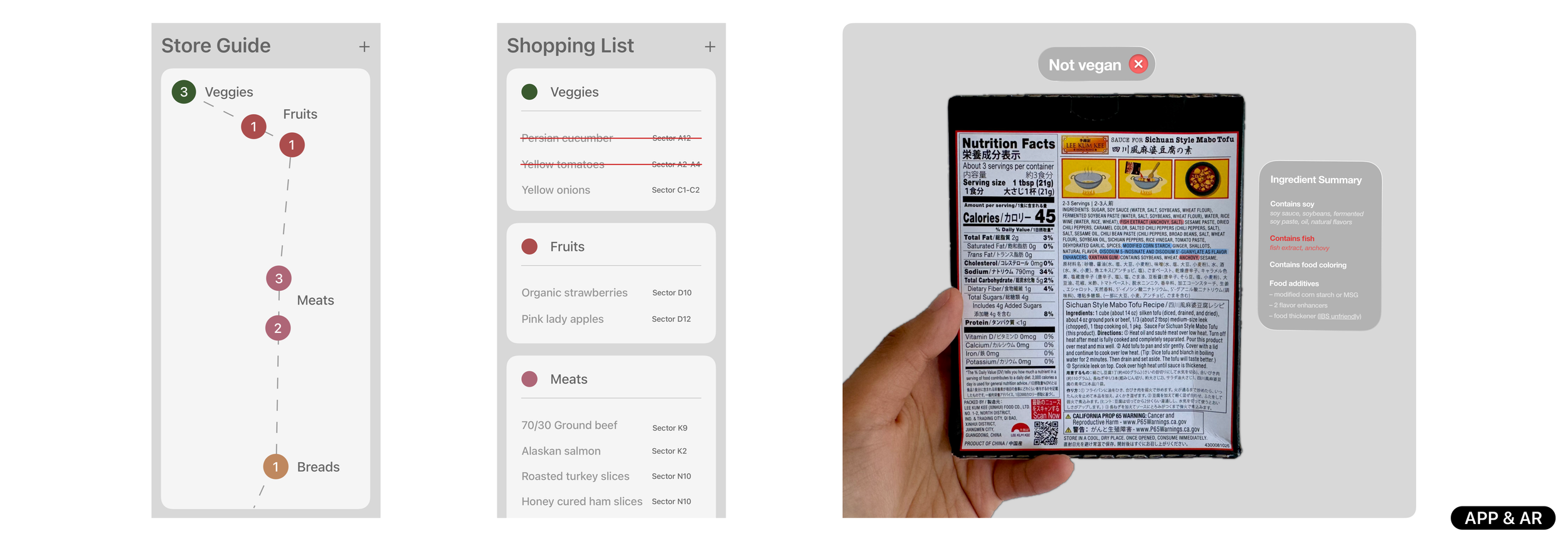

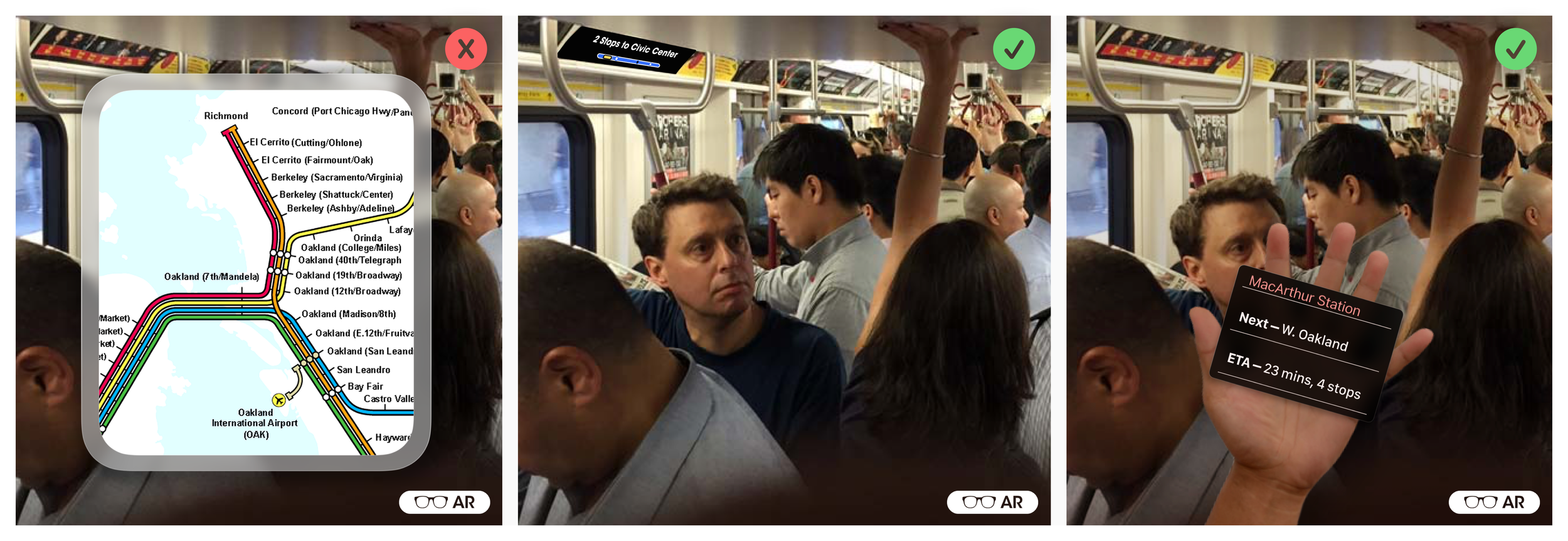

Interfaces that reduce visual noise. Our world was designed to provide the maximum amount of information to assist the most people. This can feel overwhelming.

Spatial computers can help us filter busy environments so we can focus our attention on what is most helpful.

Interfaces that learn and adapt. Spatial computers are real-world translators. They help make the world clear and intuitive.

This can be particularly helpful if your vision or hearing is limited. Not only can physical spaces become more accessible, they can also be curated to the needs of each individual.